Paper Reading #21: Human Model Evaluation in Interactive Supervised Learning

Reference

Authors: Rebecca Fiebrink, Perry R. Cook (Department of Computer Science and Department of Music), and Daniel Trueman (Department of Music), Princeton University, Princeton, New Jersey, USA

Presentation: CHI 2011, May 7–12, 2011, Vancouver, BC, Canada.

Summary

Hypothesis

The paper presents a study of the evaluation practices of end users who interactively build supervised learning systems for real-world gesture analysis problems. The authors examine users’ model evaluation criteria, which span conventionally relevant criteria such as accuracy and cost as well as novel criteria such as unexpectedness.

Contents

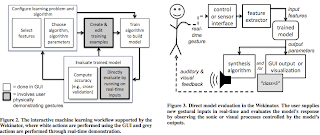

The researchers develop and test a software tool called the Wekinator, which implements the basic elements of supervised learning in a machine learning environment to recognize physical gestures and label each one as a particular input. They chose this application because gesture modeling is one of the most common applications of machine learning, and because music is often naturally gesture driven, for example when a certain gesture is recognized as a certain pitch. With the tool, they want users to be able both to judge algorithm performance and to improve trained models, as well as to provide more effective training data.

Methods

Three studies were conducted with people applying supervised learning to their work in computer music. In the first study (“A”), seven participants met for three hours each week for ten weeks. In these meetings they discussed how they were using the software in their work, proposed improvements, asked questions, and experimented with the software. Notes were taken on the composers’ questions, suggestions, and discussion topics, and suggested improvements were implemented. After the ten meetings, participants completed a written questionnaire about their experiences in the process and their evaluation of the software.

In the second study (“B”), 21 students used the Wekinator in an assignment focused on supervised learning in interactive music performance systems. The students received an in-class discussion and demo of the Wekinator and were asked to use an input device (USB controller, motion sensor, trackpad, or webcam) to create two gesturally controlled music performance systems: one that employed a classifier to trigger different sounds based on each gesture’s label, and one that employed a neural network to create a continuously controlled musical instrument (similar to Study A).

The third study (“C”) was a case study in which the authors worked with a professional cellist/composer to build a gesture recognition system for a sensor-equipped cello bow.

Results

Students in Study B retrained the algorithm an average of 4.1 times per task (σ = 5.1), and the cellist in C retrained an average of 3.7 times per task (σ = 6.8). For Study A, participants’ questionnaires indicated that they also iteratively retrained the models, and they almost always chose to modify the models only by editing the training dataset. In all studies, retraining of the models was nearly always fast enough to enable uninterrupted interaction with the system.

In Study A, composers never used cross-validation. In B and C, cross-validation was used occasionally; on average, students in B used it 1.0 times per task (σ = 1.5), and the cellist in C used it 1.8 times per task (σ = 3.8).

Participants in A used only direct evaluation; participants in B performed direct evaluation an average of 4.8 times per task (σ = 4.8), and the cellist in C performed direct evaluation 5.4 times per task (σ = 7.6). There was no objectively right or wrong model against which to evaluate correctness.
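To make the two evaluation styles concrete, here is a minimal sketch of my own, not code from the paper or from the Wekinator. It assumes a toy 1-nearest-neighbor classifier and made-up 2-D sensor readings; the function names and gesture labels are hypothetical. Cross-validation averages held-out accuracy over folds, while direct evaluation simply tries the trained model on a fresh input.

```python
import math

def nearest_neighbor_predict(train, point):
    """Return the label of the training example closest to `point`."""
    closest = min(train, key=lambda ex: math.dist(ex[0], point))
    return closest[1]

def cross_validation_accuracy(examples, k=4):
    """Mean held-out accuracy over k folds (fold i holds out every k-th example)."""
    fold_scores = []
    for i in range(k):
        held_out = examples[i::k]
        train = [ex for j, ex in enumerate(examples) if j % k != i]
        correct = sum(nearest_neighbor_predict(train, x) == y for x, y in held_out)
        fold_scores.append(correct / len(held_out))
    return sum(fold_scores) / k

# Hypothetical toy data: 2-D sensor readings labelled with a gesture name.
examples = [
    ((0.0, 0.1), "tap"), ((0.1, 0.0), "tap"),
    ((0.2, 0.1), "tap"), ((0.1, 0.2), "tap"),
    ((1.0, 0.9), "swipe"), ((0.9, 1.0), "swipe"),
    ((1.1, 1.0), "swipe"), ((1.0, 1.1), "swipe"),
]

# Cross-validation: a single summary number for the whole dataset.
cv_score = cross_validation_accuracy(examples, k=4)

# Direct evaluation: perform a new gesture and see what the model does with it.
direct_result = nearest_neighbor_predict(examples, (0.95, 1.05))
```

The cross-validation score says nothing about which gestures fail or how the failures feel in performance, which may be part of why participants leaned on direct evaluation.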

Users found the software useful and strongly agreed that the Wekinator allowed them to create more expressive models than other techniques. They held an implicit error cost function that variably penalized model mistakes based on both the type of misclassification and its location in the gesture space.
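The paper describes this cost function as implicit, so the users never wrote it down. As a rough illustration only, it could look something like the sketch below, where each mistake is weighted by which labels were confused and by where in the gesture space it happened. The labels, weights, and function names are my own assumptions, not values from the study.

```python
def weighted_error_cost(cases, type_cost, location_weight):
    """Sum the cost of all mistakes in `cases`.

    cases: list of (position, true_label, predicted_label) tuples.
    type_cost: dict mapping (true_label, predicted_label) to a penalty;
               confusions not listed default to 1.0.
    location_weight: function mapping a position to a multiplier.
    """
    total = 0.0
    for position, true_label, predicted_label in cases:
        if predicted_label != true_label:
            penalty = type_cost.get((true_label, predicted_label), 1.0)
            total += penalty * location_weight(position)
    return total

# Hypothetical weights: an unwanted loud note is worse than a missed one.
type_cost = {
    ("silence", "loud_note"): 5.0,
    ("loud_note", "silence"): 1.0,
}

def location_weight(position):
    """Hypothetical: mistakes in the middle of the gesture range count double."""
    return 2.0 if 0.4 <= position <= 0.6 else 1.0

cases = [
    (0.5, "silence", "loud_note"),  # costly confusion in a sensitive region
    (0.1, "loud_note", "silence"),  # milder confusion at the edge
    (0.9, "silence", "silence"),    # correct, no cost
]

total_cost = weighted_error_cost(cases, type_cost, location_weight)
```

Plain accuracy would score the two mistakes above equally, whereas a weighting like this one treats the first as ten times worse.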

Discussion

The research was results oriented, which showed clearly in the variety of analysis methods used and the good results produced. Machine learning is still at a young stage of development, and these kinds of methods and results are encouraging for further applications and research. What really impressed me was the real-time feedback and the way the training models can be changed in between.
